Video

Valor: A Platform- and Model-Agnostic Evaluation Store

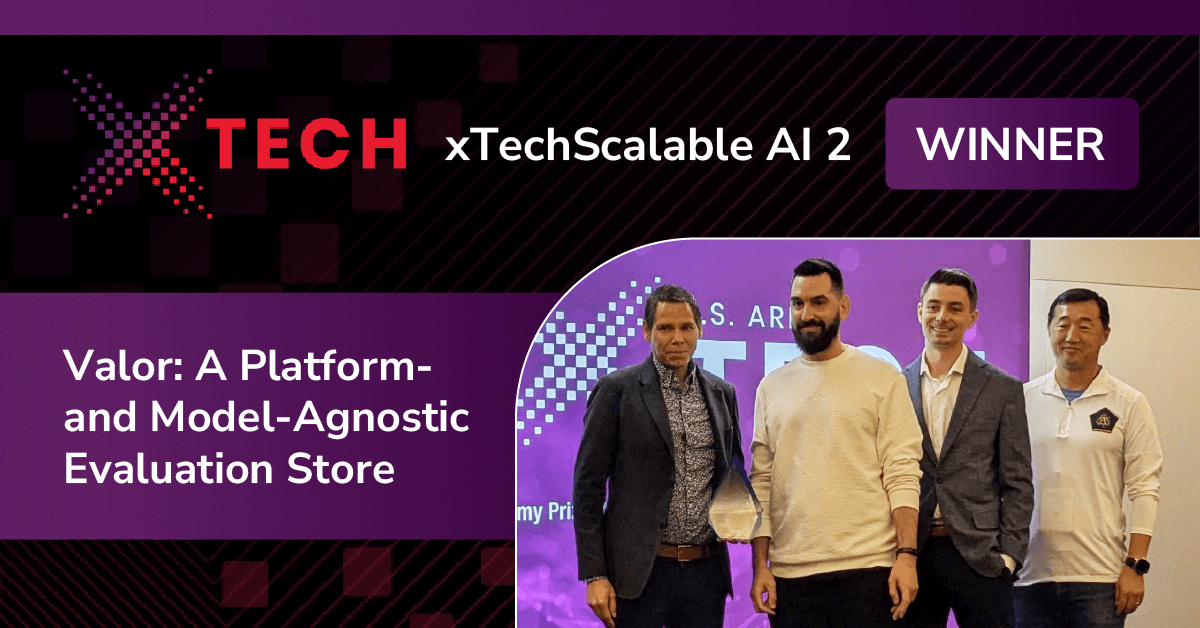

During AUSA, the Striveworks team presented Valor in the final rounds of the xTechScalable AI 2 competition in the category “Scalable Techniques for Robust Testing and Evaluation of AI Operations Pipelines.”

Striveworks Presents Valor at xTechScalable AI Competition

Transcript

Okay, thank you. Hi, everyone. I'm Eric Korman, cofounder, Chief Science Officer at Striveworks. I'll be talking about Valor, which is our platform- and model-agnostic evaluation store.

Just to give a real quick history of Striveworks, we got our start almost six years ago, deploying overseas betting with war fighters, really just seeing what problems they had in this space and trying to build solutions to add value wherever we could. Since then, we've been expanding into larger Army, other organizations within the DOD, commercial spaces and productizing our offerings, but always taking this co-development boots-on-the-ground approach to it.

So, what's the problem we're trying to solve? Well, no surprise, there's been just this huge explosion of AI/ML. So, on this left graphic, we see the number of models over time that've been added to HuggingFace Hub, which is a popular landing point for most open-source models. And so, today, there's well over a million. And then on the right, this is the Linux Foundation's AI and data landscape of just all the tooling available for the whole model development life cycle, from data management to training, deployment, and so forth. Interestingly enough, there's no T&E section. Hopefully we can help change that, but the punchline to this is it's never been easier to get access to models and train your own models, and end users just have this whole sea of models that they have to wade through. And this is compounding the DOD because now there's obviously been a ton of money poured into AI/ML. The sea of models is even bigger, and not only do war fighters have access to the COTS and open-source off-the-shelf models from the previous slide, now there's all these DOD-unique models coming from different programs or vendors.

And so, we hear directly from our involvement in programs such as NextGenC2 and Desert Sentry that these end users are just overwhelmed by trying to wade through all these models that they have at their fingertips. And so, because of this, it's crucial to have T&E to wade through the sea of models, and we really need new tools and processes to answer just this very fundamental question of: What models perform best on the data that I actually care about? And we know it's a fact that AI/ML can offer incredible benefits, especially in terms of saving people time and money through automation. We've seen recently in Europe working some GEOINT use cases that it takes an analyst one day to go through 30 square kilometers of data. But with AI/ML, this takes seconds. But obviously you're not going to get adoptability unless you can trust a model.

And so that's where T&E comes in, and so it's crucial for the deployment of AI/ML. And so, we'll narrow down to four key challenges for T&E. So, the first is model sensitivity. Second is scalability, discoverability. Third is being able to support a diverse ecosystem with different data modalities, architectures, and so forth. And then the fourth, fourth is audibility and reliability, right? You want to be able to trust T&E, because T&E is how you trust models. And so, Valor is our solution to this. It's our open-source evaluation service that makes it easy to measure, explore, rank model performance. Kind of think of it in TOD parlance as a common operating picture for testing and evaluation.

So, how it addresses each of these challenges: So, for model sensitivity where we know, we've seen in the field, that a model that maybe was deployed in one region of the world fail spectacularly in another, and so, you need the capability to really filter down your evaluation data to the pieces that are most operationally relevant and see how your model performs there. We solve scalability and discoverability, because Valor is a central service or can be a central service, so you're not reliant on performance metrics being maintained in a Jupyter Notebook that might go away (or worse) and maybe more commonly as part of a PowerPoint in an Outlook PST file somewhere. It supports a diverse ecosystem. It's agnostic completely of underlying model framework or mechanism that you use to deploy the model. So it doesn't matter if you're using PyTorch or TensorFlow, whatever, all that matters is that you can put data in and get a prediction out and Valor will work with that. And then finally, it's audible and reliable. Not only does it store metrics like accuracy and so forth, but it computes it too. So you have a whole lineage of what exactly went into that number that says your model is 87.7% accurate. And furthermore, the core of Valor is open-source. So, you actually go in the code, see exactly what created a computation.

So, real quick overview of the process of Valor: So, end users can interact with it directly via high-level Python client, or other services can interact via rest API calls. So, Valor was designed to be easily integrable with different model-serving frameworks, different data repositories. Throughout everything we do at Striveworks, we definitely take an ecosystem approach. And the basic workflow is that dataset, metadata, and ground truth annotations get posted to the service. Similarly, model predictions get posted to the service and then end users or other services can then request evaluations, pairing sets of models against sets of datasets together with fairly arbitrary filters that spell out exactly what the data is that you want to evaluate on. These evaluation metrics are then saved and queryable, so they're discoverable. Again, anyone with access to the system can see what's there, and the components that go into evaluation are also saved in the service, so that subsequent evaluations can happen very, very rapidly.

And so yeah, I'll jump into a live demo and we'll show three different ways of using Valor. The first will be integrated into an MLOps platform. The second will be more of a high-code power user who's interacting with Valor in a Jupyter Notebook. And then the third is going to be Valor integrated into an application layer, namely an LLM-RAG workflow, and giving kind of real-time evaluation metrics back.

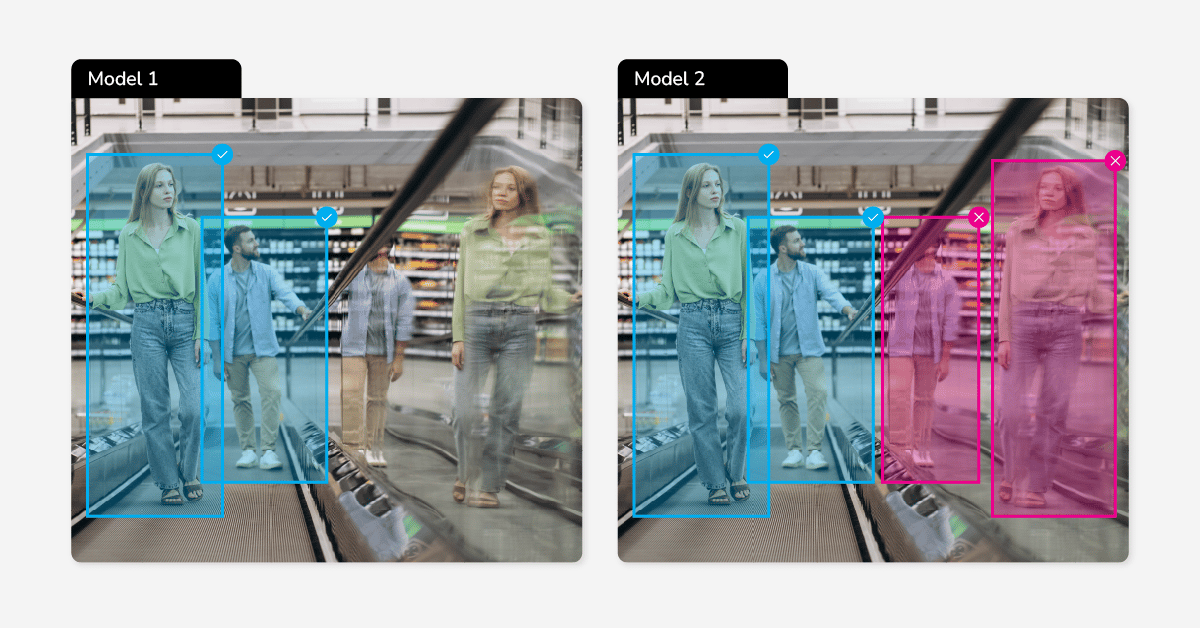

So, this is Valor integrated into an MLOps platform. In this case, the MLOps platform is called Chariot. It's actually Striveworks' core offering, but Valor, as I said, is meant to be integrable pretty generically. And this particular use case I'll go through is of an ATR use case. So, imagine an analyst is trying to detect a specific object of interest in a specific region of the world. At their disposal are a bunch of different models. And so, here we have three models trained on EO imagery. We also have three models deployed on SAR imagery. And we want to see, well for the region I care about, for the object class I care about, what model is most performant, which one should I actually use and trust the results of? So, you can use Valor to calculate the metrics overall. So, this is ATR, it's object detection. Standard metrics there are average precision metrics. Here, we see for this label class—say, naval vessel is what we're interested in. We can get this ranking of models based on, say, average precision, which again is kind of like the standard metric used in object detection. And so, in this case, we see that this Model M1 is most performant overall, and so this is this faster RCM model trained on EO imagery. But of course as I was saying, models can be not robust and their performance can really vary on different subsets of the data.

If I'm interested in a particular region of the world, I want to be able to just draw a polygon on a map and see, well, one model performs best there. And so, in real time, Valor is doing the filtering and recomputing metrics on this most operationally relevant data. And now we see we actually get a different ranking of models, where this other model down here, which is actually operating on SAR data, is more effective for the use case I care about. Valor, besides these standard metrics, they also provide examples we can see for the different models: if it hallucinated something (that is, it predicted something that wasn't there at all), if it missed a prediction, if it misclassified something (so, it got the area right but it said it was a bus but it was really a sedan), and also examples of accurate predictions for the various models.

So, that was a low-code usage of Valor. Here, we'll show a high-code usage of a programmer operating in a Jupyter Notebook. As I mentioned before, Valor has this high-level Python client that enables users to interact with the service programmatically. And so, first step, I'll just connect to the service again. Even though I'm executing these things in these notebooks, all the communication is going to the central service. So, if this kernel dies or this notebook gets destroyed, everything I've done is still existing in Valor, it's discoverable, it's accessible to other end users of the system. And so, this first example, we'll just kind of browse using Valor client to browse what's already been put into Valor. And maybe I'm interested in this wildfire prediction use case. And so, I can grab the corresponding data and model for it and now quickly kind of just browse through, see what the data looks like.

In this case, we have this metadata associated to the underlying data: so, things like day, land area, the sensor that it was collected from, either drone or satellite. And so, maybe for some reason I'm interested in seeing how well this model performs in the summer and for small land areas and for data that came from a drone. And so, Valor supports again this very robust filtering system where I'm saying, okay, I want to evaluate, but just give me the data where the day is between June and September, so summer months, land area less than this user-defined value 500, and the data source is a drone. And so, I can get this high-fidelity evaluation on that specific data. Or maybe I'm interested to see the trends and, well, how does accuracy vary as a function of land area? And so, I can write this kind of simple for loop that's iterating between different bounds of area and use standard tools like map plot loop to get this plot.

I see this nice trend that overall seems like bigger land area corresponds to more accuracy. That's very useful information to know. Now I can have more reason to trust my model if I'm evaluating against large land area versus smaller. Similarly, maybe I'm interested in what data source the model is more accurate on. So, I can do two evaluations where I'm filtering out data coming from the drone, the other from a satellite, and see, okay, this model performs better on data coming from a drone. Maybe I want to invest more of my money in collecting data from a drone versus satellite. Or maybe if I have more satellite, it's easier to get satellite data. Maybe I need to retrain more on satellite data. And so, this example, we were interacting with data that already existed in Valor. Of course, you also want to be able to create things programmatically.

So, in the second example, we show what it looks like for end users programmatically to add data sets and models to Valor. In this case, I'll just go through this toy example with scikit-learn training, a logistic regression model on a toy dataset from scikit-learn. This is the model training. And then Valor has these high-level dataset model objects I can create associated to that model. And then this “Add Ground Truth,” “Add Predictions”—that's adding the ground truth and predictions of that model and dataset to the backend service. And then I can go ahead and evaluate and get standard classification metrics back. Again, these metrics are going to be stored in that central service, discoverable, queryable, obtainable by anyone with access to the system.

So now this final use case is Valor embedded in the application layer. So, this is a simple LLM retrieval augmented generation chat interface. Again, the special sauce here isn't that we can deploy a LLM-RAG pipeline, but it's that Valor is running alongside it to evaluate the pipeline. And so here, I uploaded some fake CONOPS. And so, you can imagine an analyst wanting to ask questions—“What operation took place in July 2024?”—right? The retrieval augmented generation system is going to spit back and answer, “Desert Strike occurred there.” And then, per query, Valor is computing metrics, right? LLM-RAGs are real, they're very useful, but we know they can just flat-out make things up. We also know that the mechanism you use for the retriever can vary depending on the data source. The system is very dependent on that embedding model. And so, you want some metrics to quantitatively let you know how your pipeline's doing.

We support a bunch of metrics in this case, but here we've just plugged in two. One is context-relevant, so that's how relevant is the retriever part able to get data to answer the question. The second is hallucination from zero to one of how much this model hallucinated. In this case, it's saying there was no hallucination, which is good. And so, it's nice to get back this immediate information gauging the performance of the model, but you also want to be able to see how a model is doing in aggregate. And so, Valor is storing over time these values, and here we're applying just a histogram of those values. So that helps to give an aggregate performance measure of your model. It's also helpful for the user to see, okay, how do I put these scores in context? How do they compare to what's expected? Right?

And here, data scientists will look at this and say, okay, context relevance is pretty low. Maybe I need a better embedding model for this rag pipeline. Hallucinations pretty good. I'm happy with that. I'm good with the LLM aspect of this pipeline. That sort of analysis is what this enables.

Okay, so what do we support right now? We support classification for any data modality in computer vision. We support object detection, incident segmentation, command segmentation with the standard metrics, NLP. We support text generation and RAG metrics. So, the more modern LLM-driven ones like hallucination score context relevance, but also classical ones like “sun is blue” scores.

When we compare Valor to other offerings, there's a few key differentiators. So, one is lots of offerings in the T&E space only support, say, NLP or only computer vision. Valor supports both, as well as other data modalities. There's no human in the loop, especially for generation tasks like LLM. Some offerings just set up this battleground where they're comparing outputs using people to do the comparison. That's obviously not scalable, no pun intended. And so you really want it to be as automated as possible. And then another value add for Valor is that it's open-source. That helps with the trustworthiness, audibility of the system. And then finally, it's integrable with an end-to-end MLOps platform already, namely Chariot. And that platform has been deployed both in the commercial space and all ILs, basically, within the DOD.

So we're pitching an 18-month Phase Two SBIR. The major things we want to do are work on that UI. So, the UI showed is real, but it's something that we've just worked on the past month or two. So, we really want to expand that out, get some more visualizations. That example of visualization we saw was just pushed to prod yesterday, so we still want to continue building on that. And then the key thing is integration, right? T&E is useless without rich data sources to evaluate against and a rich set of models. And so, we envision a lot of integration with the model and data set repositories that Linchpin has, as well as the other winners of xTech. We see lots of complementary offerings, which is really great, and we'd be excited to pair with them.

The biggest risks we see are the risks that we've seen throughout our life at Striveworks, which is network access, ATO compute availability on high side, and other networks. These are all risks we've seen and we've overcome, for example, with our deployment on Chariot, which currently sits on IL-7 and is in active use.

Talking a little bit about public opportunities: So, like I mentioned, we already have Chariot deployed a lot across the ILs and various DOD organizations using it. And we see again a huge market year: just in 2024, estimated $2 billion TAM in T&E alone. And this is only going to grow as adaptability of AI/ML increases in commercial opportunities. So, we've already commercialized our dual-use technology, especially previously with Chariot, and we have a lot of paths to bring Valor to the private sector, both direct sales and joint ventures. Again, lots of our approaches in both sectors are an ecosystem-partner approach. Particular industries that we've started making some inroads in or things like healthcare, manufacturing, automotive industry, financial services have a lot of commercial channel sales to get our platform offerings out there. And then here, like Arete, who's part of this convention, have a little vignette of our work that we're doing actively with them. Prospector is an interesting commercial case that's a commercial mining company and so we see some parallels there that's very GEOINT, but in the commercial space, and we're excited about there. Overall, we see the commercial space this year: $16 billion AI/ML T&E TAM.

Yeah, like I mentioned a few times, crucial to AI/ML is really an ecosystem approach. You need to be able to support the diverse data sources that are out there, platform integration, so that your service is actually reaching end users, and ability to deploy in diverse environments. Again, we've been on TS networks, we've been on commercial spaces with Klas. You can see our booth or theirs. We have a ruggedized box that Chariot has been installed on. So, really diverse deployment settings. Thank you.

You said you can customize the metrics, right? With the UI that you're putting together within that, you can tell it what to give you back?

So, within that, you can specify tons of different filters. For example...

Position instead recall?

Exactly. Yeah, those metrics. Also, something we're working on is completely custom metrics, especially on the NLP side where things are a little fuzzier. You might want to change the prompt, and we support that.

And then that Klas box, is that the Voyager?

Yeah, I believe so.

So, for the data and models that are posting in there, that’s highly dependent on the quality of the metadata. Have you seen issues for how you are generating the metadata that would be sufficient to be then able to use for your analysis?

Yeah, that's a really good question. I mean, I will say obviously the baseline standard T&E offerings don't take any metadata in mind, right? You just have the GEOINT case, like this image, and you have the ground truth labels. But actually, we see the opposite problem of being hounded by metadata when we work with our geospatial data providers. I mean, there's all this metadata: distance from the sensor, look angle, all this stuff. It's so structured and kills me as a data scientist that all this information isn't being used in a testing evaluation or model training. But yeah, so we haven't run into a lack of. Yeah.

Do you have some initial cost metrics? What it takes to run this even at a small scale?

Yeah, that's a good question. It has a very small footprint, probably because of this guy. This is Adam Salow, our VP of Product. He's very on-the-ground. Our IL-7 instance, for example, is very resource-constrained, especially because hosting this platform that's deploying models and those need a lot of compute resources. So, our engineering team was hounded by Adam to make this resource as light as possible. So I think, for example, on the production cluster I was demoing on, is maybe four gigabytes of RAM and two virtual CPUs or something like that. That's the order. So very light. That's been a huge engineering effort for us.

So what you have today, a lot of you talked about things you already talked about, like the metrics that Valor is able to determine about the different models.

Yeah.

What are you looking to do? You talked a little bit about it, but what are you looking to do with the SBIR, wanting to advance capability forward there? A couple of different modalities, things like that?

Yeah, that's exactly right. So yeah, I mean a few things in terms of data modalities: We want to integrate with the data offerings there are, and it's going to be a bit shaped by our access and Linchpin and seeing what's out there. But independent of that, there's a ton we want to do. For example, full-motion video is very common. And so there, you get not into these object detection, ATR metrics that are, like, per frame, but what about multi-object tracking metrics? So that's one that we started thinking about. Another thing is time series data or even predicting the next value or regression metrics. Those are simple things that we can add. And so, there's lots of use cases like that. Audio embedding models is another thing that would be good to evaluate, especially with the use of RAG pipelines where that embedding model that retrieves relevant documents is crucial. We want to be able to evaluate those. So, we don't have support yet for embedding models. That'll be a little more work than some of these other data modalities. But yeah, that's where our thinking is now, independent of Linchpin, but we're definitely eager to get on the ground and see what the key use cases are there.

If I had a—is Chariot going to come with this baked into it or no?

That's a good question. Our VP of Product, Adam: The first demo you saw, that was Chariot. And, yeah, that’s a core offering to Chariot. Well, we can also break it up.

Exactly. Yeah, open-source, right?

Yeah, the Valor library is open-source. Yeah, that's exactly right.

So, do you have this—you said this is Valor's approved for IL-6 and 7? Is this actually deployed on any Army system right now? Maybe Air Force Platform? So do you want to talk about where it's deploying? Sorry.

Yeah, so right now to your point of, is it deployed with Chariot? It is deployed with Chariot. It's on the NGA’s advanced analytics platform. So, Chariot has a SWAP approval to be deployed there, so therefore it's JWICS-facing there as well. And then it's also been part of the NextGenC2 deployments as well. That's mainly on IL-5 right now. And Chariot is going through Palantir's Fed Start program right now to get FedRAMP approved. And then we have that as a certification as well.

So, a lot of that is facing the JTIC2S side and that’s tactical. What about the other side of the house of the development, the R&D side? You have any—has it been deployed in R&D spaces? Yeah, for example, let's say I want to select a model that I'm going to use for object detection, or a system I’m designing. I might want to use this to actually evaluate all those different models. Has that been something that you guys have?

Yeah, so it's something we're exploring right now. So, we're talking to C5ISR, like the Atlas program, and some of the computer vision programs there where they're still building those systems. And so, we're working through that as well.

Valor Value Proposition

- Provides rich support for adding and filtering by arbitrary metadata, allowing evaluation on the most operationally relevant data

- A central service built on tried-and-true technology, with a high-level Python API for user interaction; metrics are queryable by dataset, model, and task type

- Agnostic of underlying model framework

- Computes and stores metrics and is open source, making results auditable

Illustrative Example: Detecting Fires With Valor

We are proud to announce that Striveworks was named a winner at the xTech Scalable AI 2 competition, led by the Assistant Secretary of the Army for Acquisition, Logistics, and Technology at AUSA 2024.

Out of 200 applicants, Striveworks stood out for our model-agnostic evaluation service, Valor.

Valor is an open-source solution accessible via the Striveworks GitHub repository.

Related Resources

What is Valor, the Striveworks Model Evaluation Service?

Watch Striveworks cofounder Eric Korman discuss Valor, the first-of-its-kind, open-source AI model evaluation service.

Understanding Performance Bias With the Valor Model Evaluation Service

Valor, the Striveworks model evaluation service, exposes performance bias to give ML practitioners insight into how models perform under real-world conditions.