Summary

In a live training event, a prominent combatant command faced a significant challenge when AI-powered object detection models failed to identify objects of interest, including fallen persons and signs denoting radioactive materials. By working with Striveworks, the command was able to rapidly remediate its models and redeploy them in the field, creating a blueprint for making edge AI dependable in volatile environments.

Data Sensitivities Block Effective AI Model Training

Combatant commands under the US Department of Defense operate in contested environments, where real-time object identification by reliable AI models is a mission necessity. As an extra challenge, security concerns around sharing proprietary information often prevent teams from accessing the data needed to train a model effectively.

When two object detection models—one trained to identify fallen persons, the other radioactive-material signs—were deployed on edge AI devices, they failed to detect their targets. The real-world conditions were too different from the open source data used to train the models. This is what Striveworks calls the Day 3 Problem.

A “Day 3 Problem” Prompts Model Remediation

Fortunately, the combatant command had partnered with Striveworks—experts in rapid remediation of machine learning models, especially in remote and volatile environments. The data team immediately identified the issue as a Day 3 Problem:

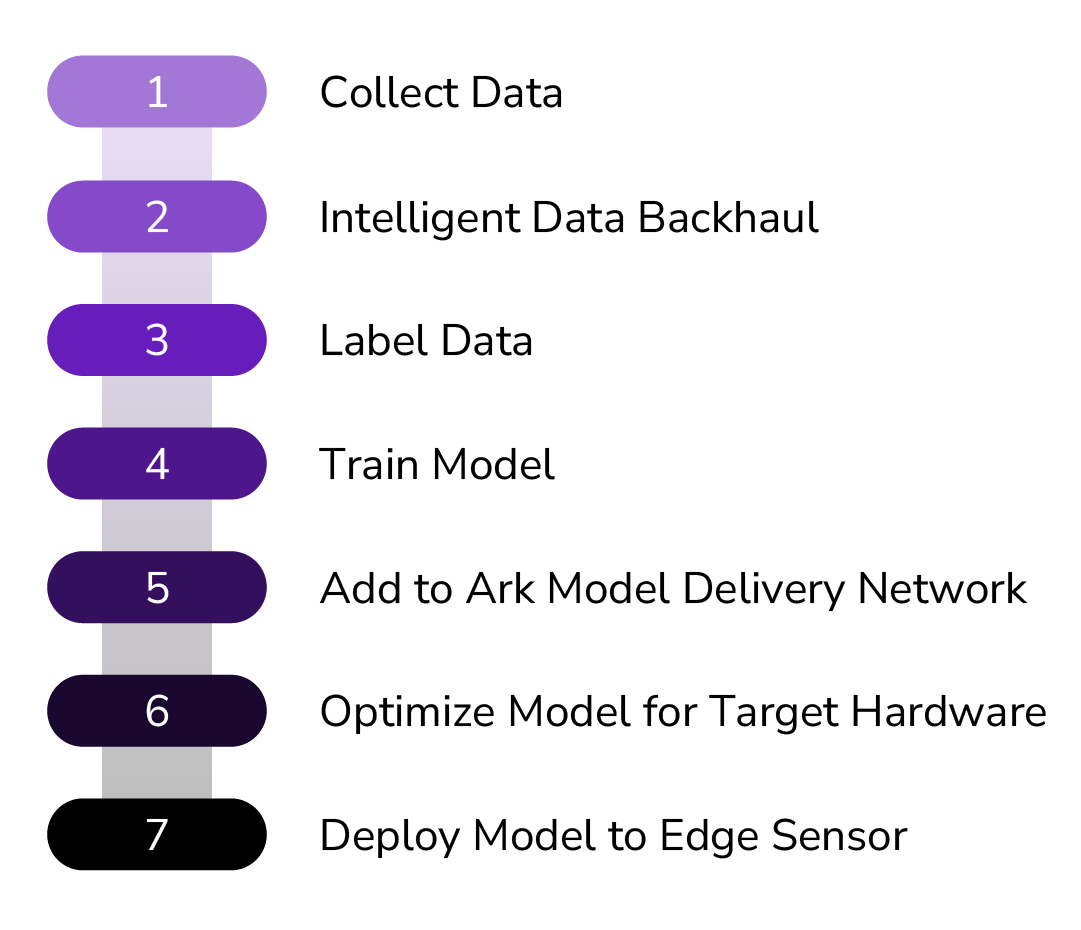

✅ Day 1: You build a model.

✅ Day 2: You put that model into production.

⚠️ Day 3: The model fails, and you’re left to deal with the aftermath.

In response, the team initiated a rapid remediation workflow—a process that involves evaluating and retraining machine learning models to improve performance and then returning them to production in a fraction of the time it takes to train a wholly new model.

Rapid Remediation Restores Performance of AI Models

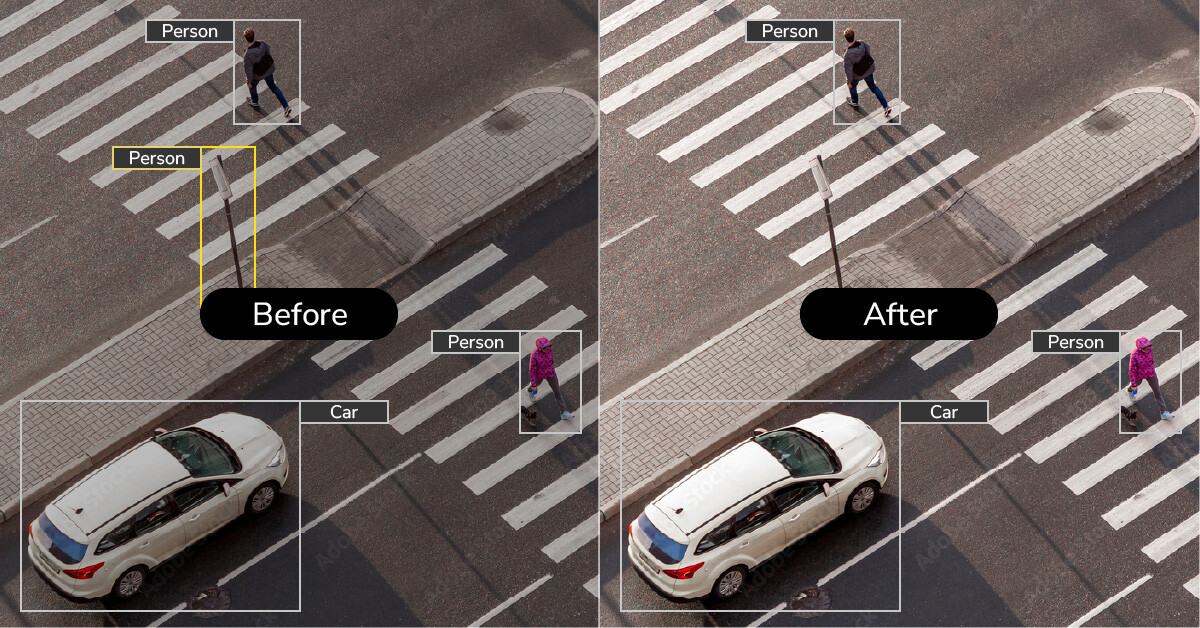

The team’s data analysis soon revealed the cause of failure: The data used to train the models was too narrow. The radioactive-sign imagery consisted of only a single sign staged in various locations on a floor—completely different from what a model would encounter in the wild. Similarly, the fallen-person imagery included many images of people at rest—a very different circumstance than someone injured on a battlefield. This inadequate data caused the models to overfit, failing to generalize to the data encountered during the live event.

Understanding this issue offered a path forward. For the radioactive-sign detector, the team sourced additional imagery from the field and loaded it into the Striveworks MLOps platform. Using the platform’s Annotation Studio, the team augmented the data to produce a more robust dataset within 45 minutes. The team then used the platform’s no-code training wizard to retrain the model in under an hour. Altogether, a fully remediated model was ready for use in the field in under two hours.

For the fallen-person detector, the team retrained its model using the open source Common Objects in Context (COCO) dataset. Although this dataset does not specifically focus on fallen persons, it still proved effective at producing a model to detect them. As with the radioactive-sign detector, the Striveworks MLOps platform had an effective model ready within mere hours.

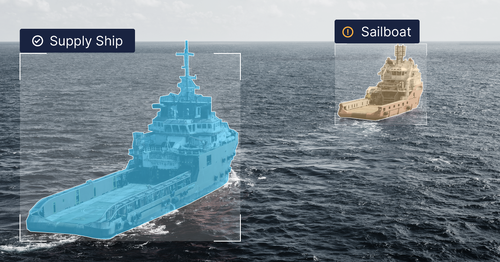

Both models were then deployed on Ark, the Striveworks edge ML platform for multi-data sensing and model serving.

A Blueprint for Using AI in Contested Environments

The remediation process was an immediate success. The rapidly retrained Striveworks models successfully identified all instances of radioactive signs and fallen persons in the field. The quick turnaround—from real-world data collection to results in hours—gave the combatant command a blueprint for realizing dependable edge ML in volatile environments, where real-world conditions change without warning.

Overfitting occurs when an AI model becomes too specialized to the specific data it was trained on, resulting in poor performance when presented with real-world data. It happens when an AI model learns not just the general features of a training dataset but also its noise and minor details.

With this project, the models initially missed the radioactive signs and fallen persons in the field because they would accept only very specific examples that matched the repetitive imagery they had been trained on. Retraining these models on broader data resolved the problem, enabling them to detect the objects of interest.

Related Resources

Model Remediation: The Solution to The Day 3 Problem of Model Drift

Learn about model remediation, the process that overcomes AI model drift to ensure the consistent, high performance of AI models into the future.

Why, When, and How to Retrain Machine Learning Models

Learn why, when, and how to retrain machine learning models to optimal performance using the Striveworks MLOps platform.