The Challenge—and Cost—of Annotating Image Segments

Many object-detection models are trained on data with simple annotations, created by dropping rectangular bounding boxes around an object of interest. It’s fast, easy, and (computationally) less intensive than some other approaches to annotation. And for counting stars in the sky or finding ships in the ocean, that can be enough. But sometimes, you need a model to be able to identify objects with precision—think self-driving cars or tumor diagnosis. In these situations, using bounding boxes to annotate training data may not be precise enough.

This is especially true when the model needs to identify objects that are irregularly shaped, that appear in busy, varied environments, or that may be partially occluded by other objects. In these cases, annotators use polygons to trace the exact outline of each object in the training data.

The trouble with polygons is that they take a lot of time and effort to draw by hand. The more complex the shape, the more points you have to place and the longer it takes. With larger datasets, the hours add up quickly. Worse, fatigued annotators can start introducing errors into the very data that demands precision.

Using the Segment Anything Model to Speed Up Annotation With Polygons

To speed up polygon annotation and reduce user error, we need a better system. In April 2023, Meta released the Segment Anything Model (SAM), which can automatically generate precise segmentation masks for objects in virtually any image; those masks can then be traced into polygons. (Video support arrived with SAM 2 in 2024.) Without any prior knowledge of an image's content or metadata, SAM can accurately identify the boundaries of most whole objects.

And when it gets the boundaries wrong, you can simply specify which parts are not actually part of the object. In this example, we’re telling SAM that the part of the building to the left of the tree (pink dot) should not be included with the part to the right of the tree (turquoise dot).
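The dots in this example are what SAM calls point prompts. In Meta's open-source `segment_anything` package, `SamPredictor.predict` accepts `point_coords` (an N×2 array of (x, y) pixel positions) and `point_labels`, where 1 marks a foreground point and 0 a background point. The minimal sketch below only assembles those two inputs from include/exclude clicks; the `Click` type, the `to_point_prompts` helper, and the coordinates are hypothetical, and plain lists are used so it runs without NumPy or model weights.

```python
from dataclasses import dataclass


@dataclass
class Click:
    """A hypothetical annotator click, in image pixel coordinates."""
    x: float
    y: float
    include: bool  # True = part of the object, False = exclude this region


def to_point_prompts(clicks):
    """Convert clicks into SAM-style (point_coords, point_labels).

    Follows the segment_anything convention: label 1 = foreground,
    label 0 = background. Wrap each list in np.array(...) before passing
    it to SamPredictor.predict.
    """
    coords = [[c.x, c.y] for c in clicks]
    labels = [1 if c.include else 0 for c in clicks]
    return coords, labels


# Hypothetical clicks mirroring the building example above:
clicks = [
    Click(620, 300, include=True),   # turquoise dot: keep this part
    Click(180, 310, include=False),  # pink dot: leave this part out
]
coords, labels = to_point_prompts(clicks)
print(coords, labels)
```

Each extra click simply appends another coordinate and label, so refining a mask is a matter of adding points and re-running the prediction.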

For Striveworks users, we’ve integrated SAM into our Chariot platform to make annotating with polygons much more efficient. A task that would otherwise involve drawing lots of complex shapes by hand can now be done with just a few clicks per image.

To annotate an image with polygons in Chariot, just draw a box over the object. This box does not have to be precise.
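The box is another kind of SAM prompt: `SamPredictor.predict` in `segment_anything` takes a `box` argument in XYXY pixel format (left, top, right, bottom). A user may drag from any corner, or past the edge of the image, so a tool needs to normalize the drag first. The helper below is a hypothetical sketch of that step, not Chariot's implementation.

```python
def drag_to_xyxy(start, end, width, height):
    """Turn a click-and-drag into a SAM-style [x0, y0, x1, y1] box.

    start and end are the (x, y) pixel positions where the drag began and
    ended, in either order; the box is clamped to the image bounds.
    """
    (sx, sy), (ex, ey) = start, end
    x0, x1 = sorted((sx, ex))
    y0, y1 = sorted((sy, ey))

    def clamp(value, upper):
        return max(0, min(value, upper))

    return [clamp(x0, width), clamp(y0, height), clamp(x1, width), clamp(y1, height)]


# Dragging up and to the left, partly off the edge of a 640x480 image:
print(drag_to_xyxy((700, 400), (120, -20), width=640, height=480))  # [120, 0, 640, 400]
```

Because SAM treats the box only as a rough hint, the helper does no snapping or padding; it just guarantees a well-formed box.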

SAM will automatically detect objects within the box and draw polygons around the object borders. If the polygons are accurate, then you can label and save the suggestions as annotations.
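SAM itself returns a per-pixel mask, so a tool has to trace the mask's outline and reduce it to a manageable number of editable vertices. How Chariot does this isn't described here; a common choice for the simplification step is the Ramer-Douglas-Peucker algorithm (the idea behind OpenCV's `cv2.approxPolyDP`). This minimal sketch assumes the outline has already been traced into an ordered list of (x, y) points, for example with `cv2.findContours`, and uses a hypothetical pixel tolerance `epsilon`.

```python
import math


def _perp_distance(p, a, b):
    """Distance from p to the line through a and b (or to a if a == b)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def rdp(points, epsilon):
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    # Find the interior point that deviates most from the first-last chord.
    index, max_dist = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _perp_distance(points[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= epsilon:
        return [first, last]  # everything in between is within tolerance
    left = rdp(points[: index + 1], epsilon)
    right = rdp(points[index:], epsilon)
    return left[:-1] + right  # drop the duplicated split point


def simplify_outline(outline, epsilon):
    """Simplify a closed outline: close the ring, simplify, then reopen it."""
    ring = list(outline) + [outline[0]]
    return rdp(ring, epsilon)[:-1]


# A dense 40-point outline of a 10x10 square, one point per pixel step.
square = ([(x, 0) for x in range(10)] + [(10, y) for y in range(10)]
          + [(x, 10) for x in range(10, 0, -1)] + [(0, y) for y in range(10, 0, -1)])
print(simplify_outline(square, epsilon=1.0))  # [(0, 0), (10, 0), (10, 10), (0, 10)]
```

A larger `epsilon` yields fewer vertices at the cost of a looser fit, which is the same trade-off an annotator makes when deciding how many points to click by hand.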

How Can I Annotate Parts of an Object?

SAM works very well for drawing precise polygons over just about any whole object. However, if you need to draw polygons around parts of an object, then the results can be mixed—particularly if there are no obvious borders between those parts.

Let’s look at some examples:

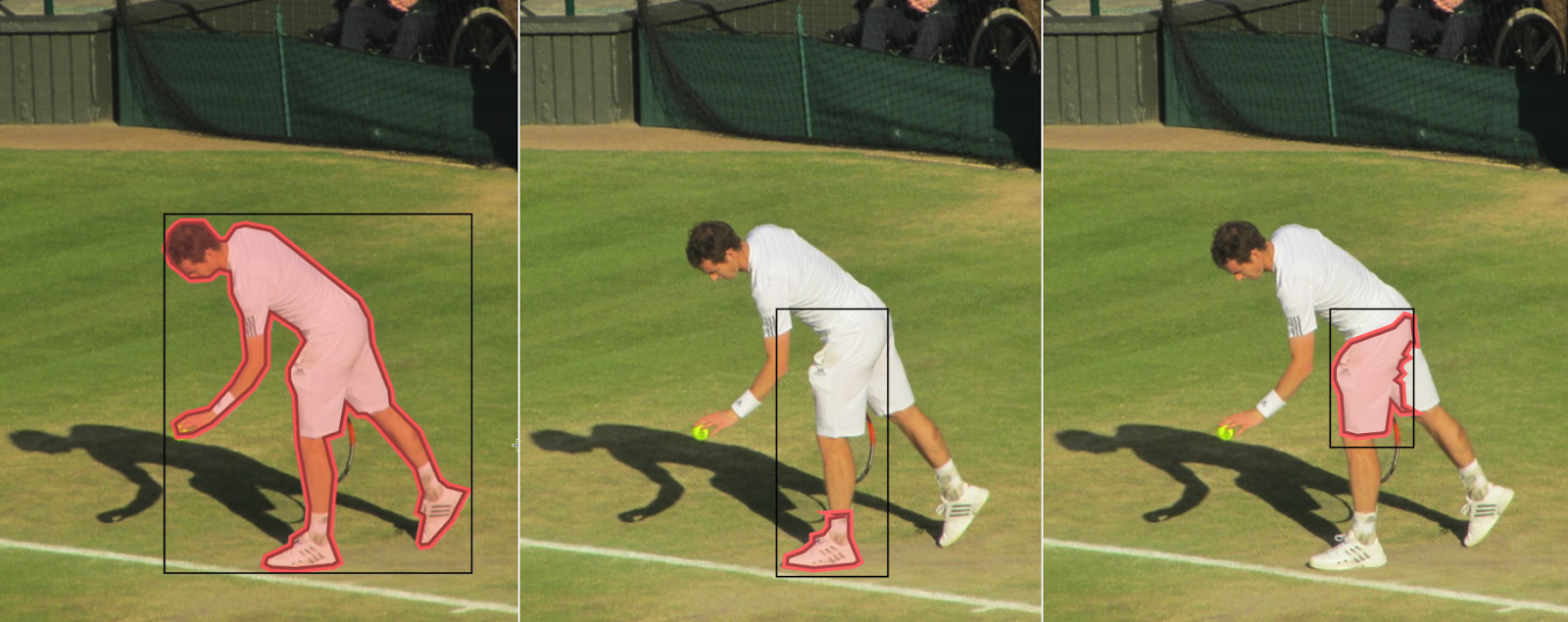

- When outlining a whole person, SAM works well.

- When outlining a leg, SAM only identifies the shoe.

- When outlining the upper left leg of a person wearing all white, it can take longer to tell SAM what you're looking for than it would to just draw the polygon yourself.

To help with this particularly challenging annotation situation—outlining parts of objects—we came up with a workflow that minimizes the amount of manual work involved.

Using Chariot to Efficiently Annotate Parts of an Object

We’ll continue using human body segments in our example, and we’ll begin by using SAM to do what it does best, outlining a whole object—in this case, a whole person.

From here, we can break down this polygon into smaller polygons, one for each part of the person’s body that we need to annotate.

To do that, we select a point along the edge of the polygon to begin making a cut.

Then, we click to draw points to make a line along which the polygon will be cut. To finish the cut, we select an endpoint on the edge of the original polygon.

We’ll repeat this process until the original polygon is cut into all the parts we need.
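The cutting steps above can be sketched as plain polygon geometry. This is a minimal sketch under stated assumptions, not Chariot's code: polygons are lists of (x, y) vertices, a cut is a polyline whose first and last points lie on two different edges, and the points in between are the clicks drawn inside the shape. `split_polygon` and `apply_cuts` are hypothetical names; the sketch does not check that a cut stays inside the polygon, and same-edge cuts are rejected.

```python
def _on_segment(p, a, b, tol=1e-9):
    """True if point p lies on segment a-b (within a small tolerance)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
    if abs(cross) > tol:
        return False
    return (min(ax, bx) - tol <= px <= max(ax, bx) + tol
            and min(ay, by) - tol <= py <= max(ay, by) + tol)


def _edge_index(poly, p):
    """Index i of the first edge poly[i] -> poly[i + 1] containing p, or None."""
    n = len(poly)
    for i in range(n):
        if _on_segment(p, poly[i], poly[(i + 1) % n]):
            return i
    return None


def _walk(poly, start, stop):
    """Vertices from index start through index stop, wrapping around."""
    out, k = [], start
    while True:
        out.append(poly[k])
        if k == stop:
            return out
        k = (k + 1) % len(poly)


def _contains(poly, p):
    """Even-odd ray-casting test for whether p is inside poly."""
    x, y = p
    inside = False
    for k in range(len(poly)):
        (x1, y1), (x2, y2) = poly[k], poly[(k + 1) % len(poly)]
        if (y1 > y) != (y2 > y):
            if x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
                inside = not inside
    return inside


def split_polygon(poly, cut):
    """Split poly along a cut that starts and ends on two different edges."""
    i, j = _edge_index(poly, cut[0]), _edge_index(poly, cut[-1])
    if i is None or j is None or i == j:
        raise ValueError("cut must start and end on two different edges")
    n, inner = len(poly), list(cut[1:-1])
    # Each piece follows the original boundary one way, then returns along the cut.
    piece_a = [cut[0]] + _walk(poly, (i + 1) % n, j) + [cut[-1]] + inner[::-1]
    piece_b = [cut[-1]] + _walk(poly, (j + 1) % n, i) + [cut[0]] + inner
    return piece_a, piece_b


def apply_cuts(poly, cuts):
    """Apply cuts in order, each to the piece its first segment runs through."""
    pieces = [poly]
    for cut in cuts:
        probe = ((cut[0][0] + cut[1][0]) / 2, (cut[0][1] + cut[1][1]) / 2)
        for idx, piece in enumerate(pieces):
            i, j = _edge_index(piece, cut[0]), _edge_index(piece, cut[-1])
            if i is not None and j is not None and i != j and _contains(piece, probe):
                pieces[idx:idx + 1] = split_polygon(piece, cut)
                break
        else:
            raise ValueError(f"no piece accepts cut {cut}")
    return pieces


def area(poly):
    """Shoelace formula: absolute area of a simple polygon."""
    s = 0.0
    for k in range(len(poly)):
        (x1, y1), (x2, y2) = poly[k], poly[(k + 1) % len(poly)]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


# A toy 4x10 "person" outline in image coordinates (y grows downward).
person = [(0, 0), (4, 0), (4, 10), (0, 10)]
cuts = [
    [(0, 6), (4, 6)],   # waist: separates upper body from legs
    [(2, 6), (2, 10)],  # between the legs
]
pieces = apply_cuts(person, cuts)
print([area(p) for p in pieces])  # [24.0, 8.0, 8.0]
```

Checking that the piece areas sum to the original area (40 here) is a cheap sanity test that no region was lost or duplicated by a cut.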

We’ve found that this workflow speeds things up by about 30% when annotating parts of an object, and, as always, we’re continuing to look for ways to make each step in this process faster.

FAQ

What is SAM?

SAM (the Segment Anything Model) is a segmentation model made by Meta. Segmentation models take images as input and output pixel-level masks, often converted to polygons, that mark the boundaries of objects in the image. SAM is promptable rather than limited to a fixed set of trained classes: given clicks or a box, it segments whatever object they indicate. Meta released the original Segment Anything Model in April 2023. A second generation, SAM 2, with expanded capabilities (including video segmentation) and improved performance, was made available in July 2024. Chariot supports both SAM and SAM 2 for annotating images.

What alternatives to SAM are available?

There are many image segmentation models available for different use cases. Meta has even come out with a family of models called Sapiens for “human-centric vision tasks,” including body part segmentation.

What makes SAM better?

SAM is a powerful, fast, and easy-to-use model, available under a permissive Apache 2.0 license. It is general purpose and provides accurate results across a wide variety of domains.

What are the downsides to using SAM?

While using SAM does have the potential to speed up annotation workflows, it can be computationally expensive to run, particularly if you’re using it frequently over larger datasets.