Embedded AI Model Observability and Auditability

With the rapid adoption of AI and machine learning (ML) across all industries comes a greater need for humans to understand and track these developing technologies. Being able to explain why models perform the way they do, how they comply with guidelines and best practices, and how users can reproduce their results is paramount in any industry.

Striveworks’ model governance helps establish trust in your data, models, and insights. Our approach is to capture and centralize critical information to answer fundamental questions about your AI:

- Where did the data come from?

- Where did the data go?

- How did the data change?

- Who changed it?

Make Your AI Observable, Auditable, and Secure

Striveworks is pioneering AI governance for accurate, effective, and ethical AI models.

Observable ML

Auditable ML

Secure ML

Access Your Full Data and Model Lineage

To make AI auditable and reproducible, teams need to rigorously document all elements of model development and subsequent changes. The Striveworks platform automates this, helping data scientists, decision-makers, and regulators track a model's design, training data, hyperparameters, evaluation metrics, and ongoing performance.

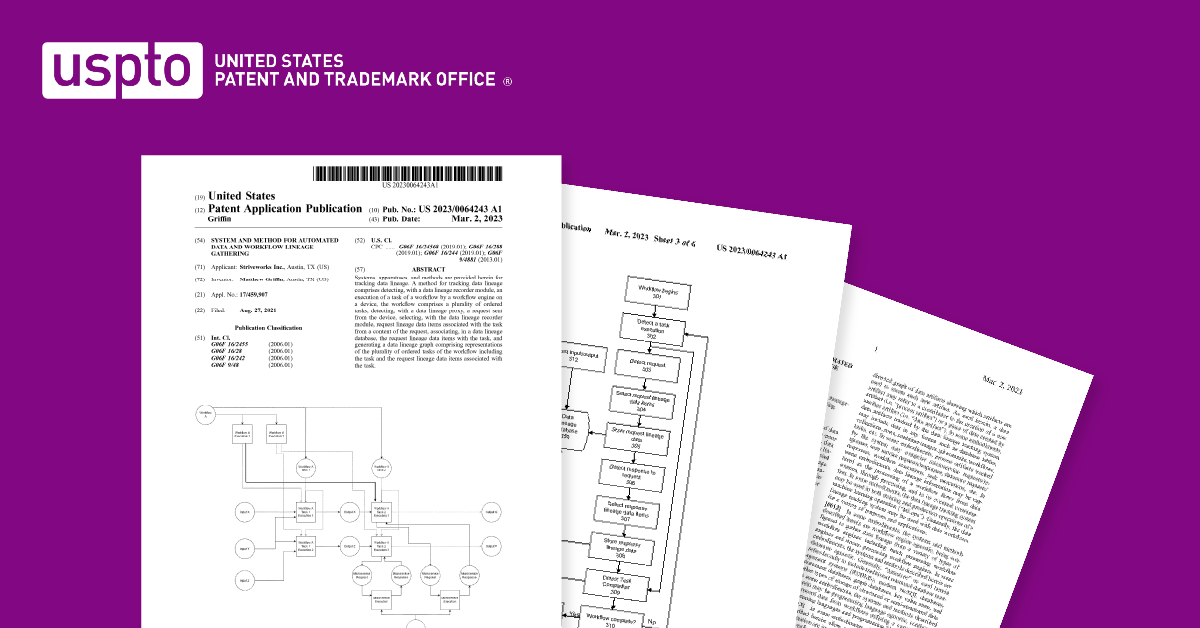

Native to the Striveworks platform, our patented data lineage process logs every action on every data point across workflows. Maintain traceability throughout data preparation, model training, serving, and remediation, building your understanding of and trust in the safety and compliance of your AI projects.

- Explore a complete record of model activity—from data ingestion through production monitoring.

- Link all elements of your model life cycle seamlessly—from data ingestion through training, deployment, and production monitoring.

- Track all dataset and model versions.

- View model contributors and what they have added for insight into your machine learning operations (MLOps).
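To make the idea of logging every action on every data point more concrete, here is a minimal sketch of an append-only lineage log. It is an illustration only: the `LineageEvent` and `LineageLog` names, the fields, and the sample values are all assumptions made for this example, not the Striveworks API or its patented process. Each record captures where the data came from, where it went, how it changed, and who changed it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class LineageEvent:
    """One logged action on one data point (hypothetical schema)."""
    data_id: str      # which data point was touched
    actor: str        # who changed it
    action: str       # how it changed, e.g. "ingest" or "relabel"
    source: str       # where the data came from
    destination: str  # where the data went
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class LineageLog:
    """Append-only log: events are added, never edited or deleted."""

    def __init__(self) -> None:
        self._events: List[LineageEvent] = []

    def record(self, event: LineageEvent) -> None:
        self._events.append(event)

    def history(self, data_id: str) -> List[LineageEvent]:
        """Return every event for one data point, in the order logged."""
        return [e for e in self._events if e.data_id == data_id]


# Made-up sample data for illustration.
log = LineageLog()
log.record(LineageEvent("img-001", "alice", "ingest", "raw-bucket", "dataset-v1"))
log.record(LineageEvent("img-001", "bob", "relabel", "dataset-v1", "dataset-v2"))
log.record(LineageEvent("img-002", "alice", "ingest", "raw-bucket", "dataset-v1"))

for event in log.history("img-001"):
    print(event.actor, event.action, event.source, "->", event.destination)
```

Because the records are immutable and the log only grows, the full history of any data point can be replayed later for an audit.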

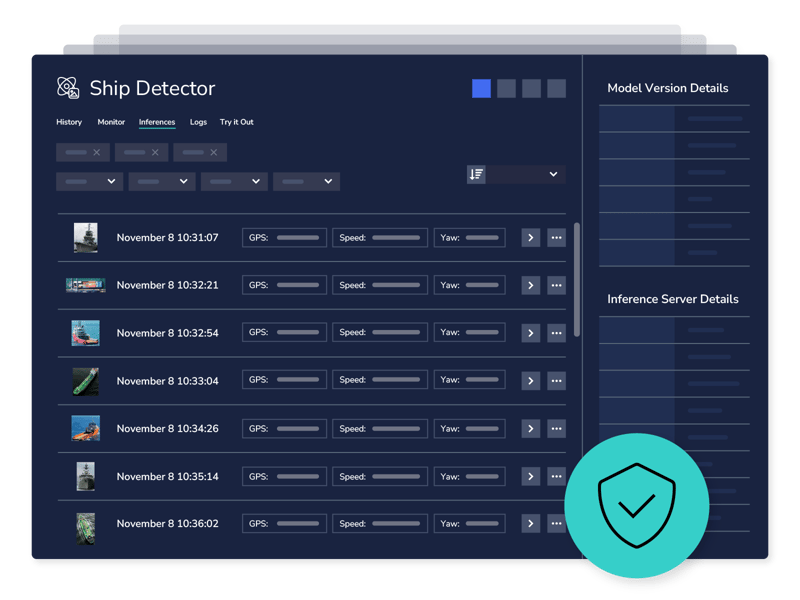

The Striveworks inference store maintains an auditable record of outputs and associated metadata from your production models. At any time, call up historical inferences to confirm their validity and to see how they contributed to organizational decisions.

- Verify the efficacy and compliance of your production models.

- Search and filter to find inferences for observation and auditing.

- Create subsets of real-world data for deeper testing and evaluation.
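As a rough sketch of what searching and filtering stored inferences might look like, the example below filters a list of records by model version, confidence, and date to build a subset for deeper review. The `InferenceRecord` fields and the `filter_inferences` helper are hypothetical names chosen for this illustration; they do not describe the actual inference store interface.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class InferenceRecord:
    """One stored model output plus metadata (hypothetical schema)."""
    model_version: str
    prediction: str
    confidence: float
    created_at: datetime


def filter_inferences(
    records: List[InferenceRecord],
    model_version: Optional[str] = None,
    max_confidence: Optional[float] = None,
    since: Optional[datetime] = None,
) -> List[InferenceRecord]:
    """Keep the records that match every filter that was supplied."""
    matches = []
    for r in records:
        if model_version is not None and r.model_version != model_version:
            continue
        if max_confidence is not None and r.confidence > max_confidence:
            continue
        if since is not None and r.created_at < since:
            continue
        matches.append(r)
    return matches


# Made-up sample data for illustration.
records = [
    InferenceRecord("v1", "cat", 0.97, datetime(2024, 1, 5)),
    InferenceRecord("v2", "dog", 0.55, datetime(2024, 2, 1)),
    InferenceRecord("v2", "cat", 0.91, datetime(2024, 2, 3)),
]

# Low-confidence outputs from one model version, set aside for re-evaluation.
review_subset = filter_inferences(records, model_version="v2", max_confidence=0.6)
print([r.prediction for r in review_subset])
```

Filters left as `None` are ignored, so the same helper covers both broad searches and narrow audits.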

Not everyone needs equal access to your data. Striveworks’ role-based access controls ensure that your ML workflows remain secure and compliant—facilitating collaboration while maintaining privacy.

These rigorous controls are good practice for any organization, and they are critical for MLOps deployments on private networks, including IL4 through IL7, and in other highly sensitive environments where the strictest security is mandated.
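For readers new to role-based access control, the general pattern can be sketched in a few lines. The role names, permission names, and the `is_allowed` function below are invented for this example and say nothing about how Striveworks configures or enforces its own controls.

```python
from typing import Dict, Set

# Hypothetical roles mapped to the actions each one may perform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"read_inferences"},
    "data_scientist": {"read_inferences", "read_datasets", "train_model"},
    "admin": {
        "read_inferences",
        "read_datasets",
        "train_model",
        "deploy_model",
        "manage_users",
    },
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("data_scientist", "train_model"))  # granted to this role
print(is_allowed("viewer", "deploy_model"))         # not granted to this role
print(is_allowed("contractor", "read_datasets"))    # role is not defined
```

Access is granted to roles rather than to individuals, so changing what a team can do means editing one mapping instead of many user records.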

Read what the National Security Commission on Artificial Intelligence says about Striveworks.

“Enterprise data governance has always stopped at the analytics layer. Striveworks’ solution for audit and lineage through the analytic process is a game changer: transparency for regulators means enterprises can now use AI where it matters.”

Matthew Zames

(Former) Chief Operating Officer, JP Morgan Chase

Related Resources

Striveworks Named Exemplar in NSCAI Final Report

Just two years after its founding in 2018, Striveworks had already achieved some of the highest merits that the U.S. government has to offer for its work in artificial intelligence and machine learning.

Make MLOps Disappear

Discover how Striveworks streamlines building, deploying, and maintaining machine learning models—even in the most challenging environments.

Need to Monitor Your Models?

Learn about Striveworks’ tools that give you a full understanding of model performance.